Summary

In this eBook we will examine how ecommerce sites can improve their conversion rate through detailed testing, and why so many companies fail to achieve this goal. We will also touch on common mistakes companies make, and nine of the easiest ways you can improve your conversion rate.

As a provider of at-scale testing, we have learned these lessons from working with 150+ ecommerce companies, both large and small.

These strategies have been formed and collated over many years of working on what is probably thousands of different releases, through all the highs and lows of testing.

Ecommerce Growth

Ecommerce is one of the fastest-growing industries, with sales of around $5 trillion worldwide in 2021 and growth of more than 20% during COVID.

Alongside this, mobile commerce is also growing very rapidly. In 2016, mCommerce was predicted to reach $3.56bn by 2021; in reality, the figure is much greater at $4.9 trillion, and it is forecast to grow to $7.4 trillion by 2025.

A few words about testing

Please don’t switch off now, but testing isn’t the most exciting area; we know that. It is a “necessary evil”: it slows things down, you never know whether it has been done correctly, and you still end up with problems when you go live anyway.

All in all, enough to keep you awake at night. Can you really trust your QA team not to drop the ball? And what happens to them if they do drop a clanger? Frequently, not much: at most, a telling-off from HR.

And then look at the team. Many of them are bored of repeating the same old thing time and time again, and it doesn’t matter if they miss a bug, as it will probably never be found anyway!

No matter how good they are, there is rarely any praise: just long days when releases must go out, followed by idle days spent looking for something to do while development is delayed. Often forced to cram work into a short window, they are under-resourced for the job.


It’s a recipe for disaster

The same team gets the same build and runs the same processes (with a few changes for new functionality) on frequent, high-speed releases. Time pressure means not everything can be done, and there is little penalty if something is done incorrectly.

“I spend 60% of my time making sure I don’t get sacked and 20% doing testing.” So said a tester at a recent testing meetup. The other 20%, he reported, is spent on the internet, waiting.

In essence, many teams spend much of their time ensuring that they have ‘get out of jail free’ cards, in the form of documentation, risk logs or even lessons learnt, in case something does go wrong, which of course means that less time is spent actually doing the job.

What does this mean for your conversion rate?

After reading this eBook, I urge you to go and talk to people. Random strangers, your friends, mates at the pub, your granny. Ask them if they have experienced problems buying something online and how often that happens.

I am sure you will find similar results to our study of people who regularly buy on the internet.

84% said that they had not been able to complete a purchase in the last month.

91% of them said that they went somewhere else to buy or conducted the transaction physically.

My experience is that right now, almost every ecommerce website has a problem that is blocking some customers from purchasing, and those customers are going somewhere else to buy, often Amazon or a competitor.

‘The Amazon Effect’

With great service, fair prices and a simple interface that works, Amazon is best in class. Looking back at the survey of regular internet purchasers, of the 16% who didn’t report a problem, 4.5% had only bought from Amazon in recent memory. So the actual share of people without issues is nearer 11.5%; lucky them.

No, it is not necessary to beat Amazon, but if you have someone on your site, you might as well give them the best experience, so they don’t end up reverting to Amazon or a competitor.

That is some useful background; now let’s get down to how we can help.

Strategy 1: Regression matters

Firstly, you would be amazed at how many companies believe they have good regression coverage for each release, only to be proven wrong.

Let me give you an example of a recent client experience.

For context, the company had a QA team of nine (a Test Manager and two teams of four, each with a test lead and three test engineers). They are a well-known brand with a reputation for a quality product, yet sales on the site were not as expected. A great deal of marketing work had been done to improve the experience, and senior management were annoyed that this hadn’t shown results. Our engagement was to examine a sprint and then embed our own staff in their team. Examining the first sprint revealed a clear example of missed regression testing and its impact.

When I joined the project team on a Teams call (mid-pandemic), the plan for a fairly substantial change was to run 92% of the regression pack in a two-week window, using 80 person-days of effort (10 of them on new functionality). Sounds good, I thought.

But here is what happened.

- The spec was wrong, requiring the BAs to make some changes. The release was delayed.

- The development team was also delayed, so they decided to release some components to QA early for partial testing, so that testing could commence.

- One QA was off sick for a week, another for 3 days.

- A big bug was found in new functionality, sending the release back to development.

- The Test Manager was under significant pressure to report good figures. Having worked at the company for several years, he was aware of short-cuts that could give higher coverage by running modified scripts.

The end result was that only 23% of the regression pack was run but 58% was reported.

The Test Manager took a gamble.

“I’ve been here a while; we can take a few short-cuts and we don’t have any problems.”

Now, the release wasn’t a disaster: no one panicked, and no customers reported big problems. The Test Manager took some short-cuts so that he didn’t feel even more heat; he had been dealt a difficult hand, and his modified scripts probably did give more coverage.

The problem is, to quote Mr Rumsfeld, the “unknown unknowns”.

Sure, if there are no sales, you will certainly know about it. But how big would a drop need to be before anyone noticed? No one knows whether a few customers went somewhere else after that release.

What is certain is that when we reached 86% coverage on the next release, 39 defects in existing functionality were raised from the scripts, and a further 16 defects were found through exploratory testing.

Over time, the issues were fixed by the developers and the conversion rate improved. You may think that this was a particularly bad example. It isn’t!

So, what’s the answer?

Sloppy regression leads to bugs. Ensure that regression coverage is always high, use fresh eyes, and don’t take shortcuts.

This goes hand in hand with the next recommendation.
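To make the gap between “run” and “reported” coverage visible, here is a minimal Python sketch. It assumes each script in the regression pack is logged with a status, and that a script run only in a modified, short-cut form does not count as covered. The `Status` values and this counting rule are our own hypothetical convention, not an industry standard.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PASSED = "passed"
    FAILED = "failed"
    MODIFIED = "modified"  # run only as a shortened, short-cut version
    NOT_RUN = "not_run"


@dataclass
class RegressionScript:
    name: str
    status: Status


def true_coverage(pack: list[RegressionScript]) -> float:
    """Share of the pack executed as written (passed or failed)."""
    if not pack:
        return 0.0
    executed = sum(s.status in (Status.PASSED, Status.FAILED) for s in pack)
    return executed / len(pack)


def reported_coverage(pack: list[RegressionScript]) -> float:
    """Share of the pack touched at all, counting short-cuts as full runs."""
    if not pack:
        return 0.0
    touched = sum(s.status is not Status.NOT_RUN for s in pack)
    return touched / len(pack)


# Hypothetical ten-script pack: 3 run properly, 3 short-cut, 4 never run.
statuses = [Status.PASSED] * 2 + [Status.FAILED] + [Status.MODIFIED] * 3 + [Status.NOT_RUN] * 4
pack = [RegressionScript(f"script-{i}", st) for i, st in enumerate(statuses)]

print(f"true: {true_coverage(pack):.0%}, reported: {reported_coverage(pack):.0%}")
# true: 30%, reported: 60%
```

Reporting both numbers side by side makes it harder for short-cuts to quietly inflate the figure that goes to management.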

Strategy 2: Fresh eyes tell no lies – testing resource

Question: Why do spot the difference puzzles only have two pictures?

Answer: Well, it is much harder to spot the differences between three pictures. Yet testers are asked to do this all the time, by testing on 3, 4, 5 or even 6 or more devices and platforms: Chrome, then Firefox, then an iPhone 14, then a Samsung S9, or simply the same software across successive releases. They become blind to the changes, learn shortcuts to get the job done quicker and, through no fault of their own, don’t see the bugs that can be affecting your conversion.

Now, I am not suggesting that you switch your QA team in and out every release, but this is a real problem, and not solving it will lead to missed issues and, ultimately, lost revenue.

So how does this challenge get solved?

Ideally, you need a transient army of testers to augment your existing team; the more the better (effectively what Digivante delivers). If you don’t want to do that, you need to find other ways to achieve this. A few ideas:

- Rotating a set of staff on a six-monthly cycle.

- Bringing in young talent and growing them into other areas of the business after testing.

- Using outsourced consultancies with a commitment to rotate a proportion of their staff.

Strategy 3: Testing in country – localisation testing

Following nicely on from that, to maximise conversions, you really need to test in market. The classics are a test team in Slough testing for Saudi Arabia or a team in Chester testing for China.

Let’s be clear: the UK is an isolated market, and we often have little idea how other cultures work or what they expect.

Here are a few funnies:

- A well-known high-street brand launched a site in China that displayed the person’s name in red once they logged in. Unfortunately, in China, a name written in red signifies the end of that person’s life, so conversions were predictably challenging.

- A global premium fashion retailer, already operating in 35 countries, launched in Hungary in English and priced in euros. Only 16% of people in Hungary speak any English, and Hungary is not in the eurozone.

- A hotel chain made an error in the currency conversion rates it used when launching a site in China, allowing users to switch currency and buy at a tenth of the price.

Luckily, these were all caught by our team before or just after launch, which really underlines the importance of using people in that market to test the product.

It is important to understand how those users think, how they interact with your product, and whether the product fit and presentation are correct, especially before spending thousands of pounds on marketing.
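The hotel chain’s currency error is the kind of slip an automated sanity check can catch before in-market testers ever see it. Below is a minimal Python sketch, assuming you have a reference exchange rate from a source you trust; the function name, the 5% tolerance and the example rate are all hypothetical and illustrative, not taken from any client.

```python
from decimal import Decimal


def price_looks_wrong(
    base_price: Decimal,
    displayed_price: Decimal,
    reference_rate: Decimal,
    tolerance: Decimal = Decimal("0.05"),
) -> bool:
    """Flag a localised price that strays too far from base_price * reference_rate.

    reference_rate: units of local currency per unit of base currency, taken
    from a source you trust (hypothetical input; no specific feed is implied).
    tolerance: allowed relative deviation, e.g. 0.05 means 5%.
    """
    expected = base_price * reference_rate
    if expected == 0:
        return displayed_price != 0
    deviation = abs(displayed_price - expected) / expected
    return deviation > tolerance


# Illustrative numbers only: a £100 item at an assumed rate of 9 local units per £1.
print(price_looks_wrong(Decimal("100"), Decimal("905"), Decimal("9")))  # False
print(price_looks_wrong(Decimal("100"), Decimal("90"), Decimal("9")))   # True (a tenth of the price)
```

`Decimal` is used rather than `float` so that money values are not distorted by binary rounding. A check like this complements, rather than replaces, testers in the market.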

Strategy 4: It pays to pay – payment testing

Whilst testing in market is vital, an important part of that testing should be payment validation. International payment systems are complex, and some simply don’t work consistently, which often comes as a complete surprise. Part of Digivante’s standard offering for international site testing is the option to complete live payments with the 5 to 10 largest payment providers in that country.

In one example, the largest bank in Iceland didn’t connect through to a UK company’s payment provider. Users of that company’s site in Iceland frequently couldn’t complete a transaction and were shown only an error, which is not very helpful.

Don’t assume you are immune to third-party problems either. Another client with ecommerce operations in Germany was surprised to find that payments initiated from their app via Apple Pay were not working. Whilst our client accepted umlauts, their Apple Pay integration provider did not support these characters and stripped out address lines. Had this gone live, it would have resulted in missed deliveries and postage problems.

So, it is important not only to cover every country you deliver to, but also to cover the devices and payment methods relevant to each of those countries.
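The umlaut problem above can be caught by checking that an address survives the round trip through a third-party integration unchanged. Here is a minimal Python sketch; `ascii_only_provider` is a hypothetical stand-in that imitates a provider dropping non-ASCII characters, not the behaviour of any real payment API.

```python
import unicodedata


def lost_characters(sent: str, received: str) -> set[str]:
    """Characters in the submitted address that no longer appear after the round trip.

    Both strings are normalised to NFC first, so a precomposed 'ü' and 'u' plus a
    combining diaeresis compare as equal. A character removed in one place but still
    present elsewhere is not reported; comparing the full strings catches that case.
    """
    sent_nfc = unicodedata.normalize("NFC", sent)
    received_nfc = unicodedata.normalize("NFC", received)
    return set(sent_nfc) - set(received_nfc)


def ascii_only_provider(address: str) -> str:
    """Hypothetical stand-in for a third-party integration that drops non-ASCII."""
    return address.encode("ascii", errors="ignore").decode("ascii")


sent = "Müllerstraße 5"
received = ascii_only_provider(sent)
print(received)                                 # Mllerstrae 5
print(sorted(lost_characters(sent, received)))  # ['ß', 'ü']
```

In a real test, `received` would be the address as it comes back from the provider (for example, in an order confirmation), and any non-empty result would fail the test.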

Strategy 5: Multiple devices – device coverage

Beyond payment options, there is also the question of devices. The device fragmentation problem of 5 to 10 years ago has undoubtedly eased, but an average ecommerce site will still see a wide range of devices and browsers arriving, and some of them may not convert due to insufficient testing.

This is setting fire to money. Your team has done the hard work of getting someone onto the site, frequently ready to buy, only to send them away because the site doesn’t work properly on their device.

One of the key issues is that mobile traffic from an iPhone or iPad is simply categorised as ‘Apple iPhone’ or ‘Apple iPad’. This ambiguity makes it hard for any website administrator or ecommerce manager to identify key devices, or behaviours on those devices, because of the many models out there, whose varying hardware capabilities can certainly affect the experience and, consequently, online purchases.

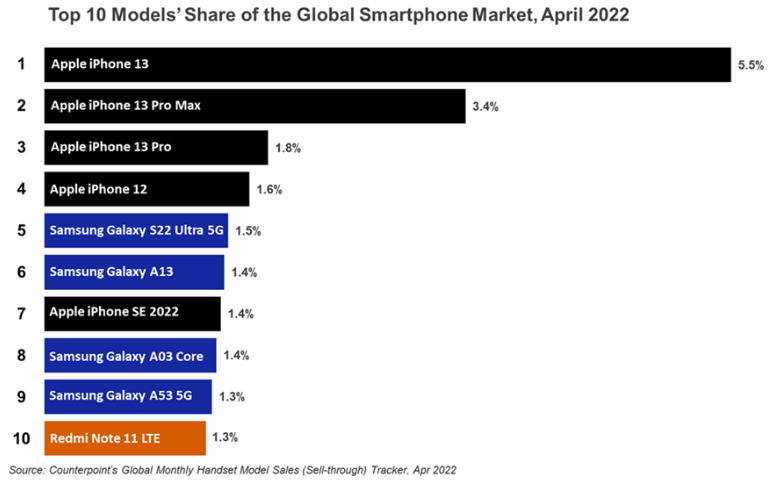

For example, compare an iPhone 13 with an iPhone SE. Both are popular devices, as the chart shows, yet their screen sizes differ (6.1 inches vs 4.7 inches), as frequently does their RAM, which can lead to a very different user experience.

To put this in context: with circa $105bn available in what is the 4th largest ecommerce market, and with online spending forecast to increase, the potential is great.
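To see why analytics tools lump these devices together, it helps to look at what the browser actually sends. As far as we are aware, a typical Safari user-agent string on an iPhone names the device family and the iOS version but not the model. The minimal Python sketch below extracts what is there; the regular expression and the sample string are our own illustration, not a complete user-agent parser.

```python
import re
from typing import Optional

# Matches the device family and OS version in iPhone/iPad Safari user agents,
# e.g. "(iPhone; CPU iPhone OS 16_5 like Mac OS X)" or "(iPad; CPU OS 15_0 like Mac OS X)".
IOS_UA = re.compile(r"\((iPhone|iPad); CPU (?:iPhone )?OS (\d+)_(\d+)")


def describe_apple_ua(user_agent: str) -> Optional[dict]:
    """Return family and OS version from an iOS user agent, or None if not matched."""
    match = IOS_UA.search(user_agent)
    if match is None:
        return None
    family, major, minor = match.groups()
    # The string carries no model identifier, so an iPhone 13 and an iPhone SE
    # on the same iOS version look identical here.
    return {"family": family, "os_version": f"{major}.{minor}", "model": None}


ua = ("Mozilla/5.0 (iPhone; CPU iPhone OS 16_5 like Mac OS X) "
      "AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.5 Mobile/15E148 Safari/604.1")
print(describe_apple_ua(ua))
# {'family': 'iPhone', 'os_version': '16.5', 'model': None}
```

The `model` field is always `None` by design: that missing piece of information is exactly why a 4.7-inch and a 6.1-inch screen can end up in the same ‘Apple iPhone’ bucket.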

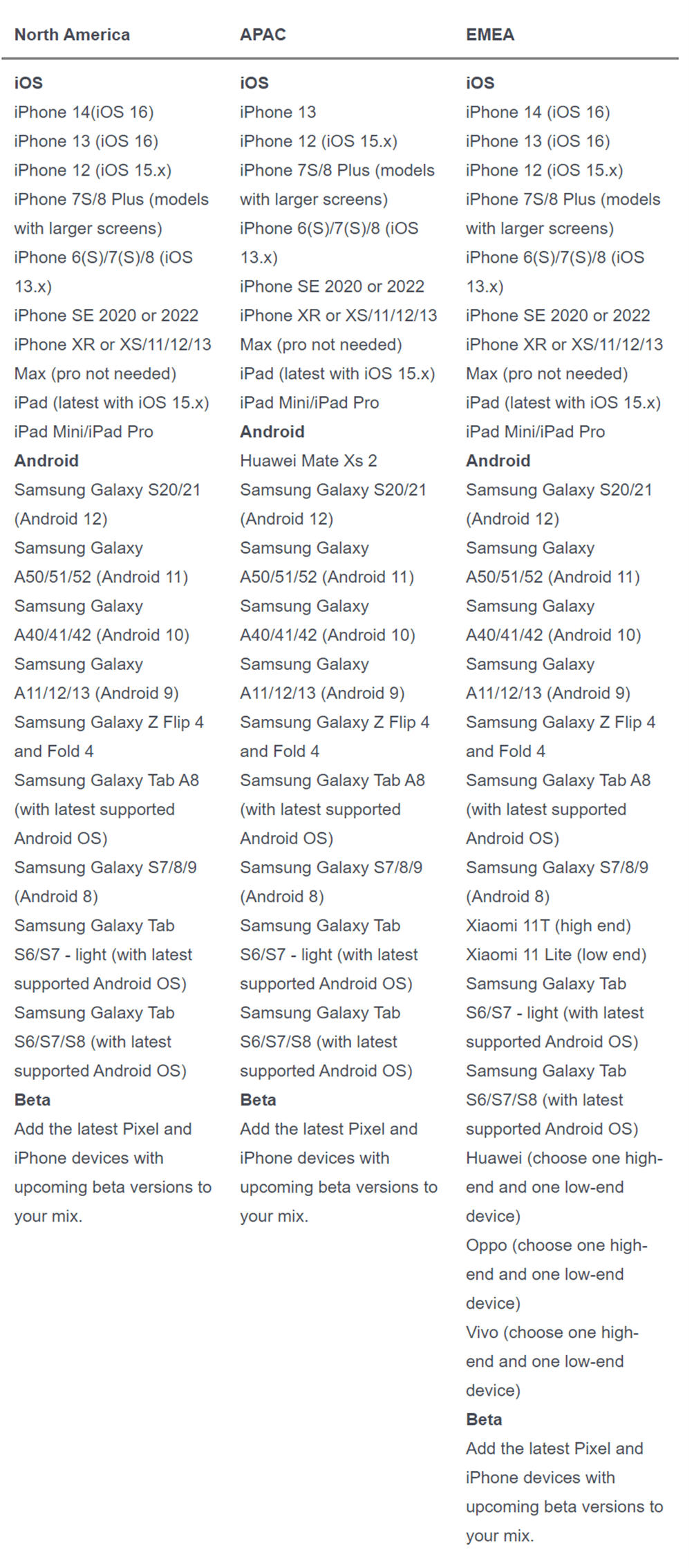

However, to cover this market, you need to cover a range of browsers on different platforms (Windows and Apple Mac, including Safari, Chrome, Firefox and Edge). Additionally, with 50%+ of traffic coming from mobile devices, how many devices do you cover: 10, 15, 20? And what about different iOS and Android versions?

With a small team, it can be tricky to cover even a few devices, let alone the range of devices that the market dictates. This interesting list from Sauce Labs shows some of the devices you should be considering:

Source: Sauce Labs

Strategy 6: Traffic doesn’t equal revenue

Despite the challenges discussed above in Strategy 5 about the number of devices that will be hitting your site, a device’s share of traffic does not necessarily correspond to its share of revenue, so it makes most sense to focus on the devices and platforms that bring you the most revenue.

Having worked with hundreds of ecommerce sites, Digivante has identified a few key trends:

- iPhones tend to generate more revenue than Android devices, despite accounting for less traffic.

- Apple Mac platforms are a small percentage of traffic but typically have a much better conversion rate than other desktop platforms.

- Chrome and Edge tend to convert better than other browsers, but there is not that much of a difference.

Yet few companies consider this when choosing the devices they test on, which is clearly a mistake.

As they say, follow the money.
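Following the money can be as simple as ranking platforms by revenue rather than by sessions when choosing what to test. The minimal Python sketch below does this; the `PlatformStats` fields and all the figures are made up for illustration, loosely shaped to echo the trends above, and are not real client data.

```python
from dataclasses import dataclass


@dataclass
class PlatformStats:
    platform: str
    sessions: int
    orders: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.sessions if self.sessions else 0.0


def top_by(stats: list[PlatformStats], key: str, n: int) -> list[str]:
    """Names of the n platforms with the highest value of `key`."""
    ranked = sorted(stats, key=lambda s: getattr(s, key), reverse=True)
    return [s.platform for s in ranked[:n]]


# Made-up analytics figures, for illustration only.
stats = [
    PlatformStats("Chrome / Android", sessions=50_000, orders=500, revenue=40_000.0),
    PlatformStats("Safari / iPhone", sessions=30_000, orders=600, revenue=60_000.0),
    PlatformStats("Firefox / Windows", sessions=8_000, orders=80, revenue=6_000.0),
    PlatformStats("Safari / Mac", sessions=5_000, orders=150, revenue=20_000.0),
]

print(top_by(stats, "sessions", 3))  # ['Chrome / Android', 'Safari / iPhone', 'Firefox / Windows']
print(top_by(stats, "revenue", 3))   # ['Safari / iPhone', 'Chrome / Android', 'Safari / Mac']
```

With a three-device budget, a traffic-based list would test Firefox on Windows, while a revenue-based list picks Safari on Mac, which in this made-up data converts at 3% against 1% for the platform it replaces.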

Furthermore, when analysis is done on a typical ecommerce site using Digivante’s advanced ecommerce technology, we have discovered time and time again that minor fixes to issues introduced during development, which show up as a slightly different experience on otherwise similar devices, can dramatically increase revenue.

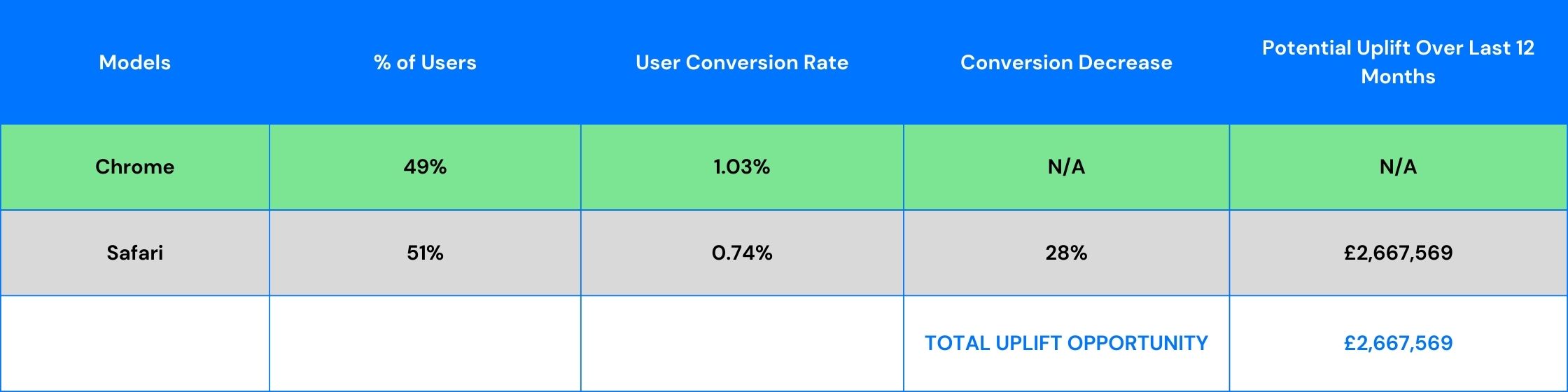

Let me give you an example. A travel company, primarily focussed on store operations, had an ecommerce site turning over around £13m from desktop, of which £3m came from Apple Mac platforms. Most testers testing on a Mac would cover the latest version of Safari; however, this company saw 49% of its traffic come from Chrome on Mac. And Chrome converted best, at 1.03% vs 0.74% for Safari. It later emerged that Safari on Mac had never been tested; Chrome simply worked better because it had been.

To cut a long story short, if the conversion rate on Safari could be raised to match Chrome’s (and why not? Both sets of users are on Macs), the financial uplift would be £2.7m.

So, after some testing by Digivante focussed on the two platforms, we were able to identify a range of issues that were causing the lower conversion rate. Once these were fixed, the company saw its conversion rate on Safari for Mac increase. You can see this information below:

So, whilst many ecommerce leaders look at the various levels of traffic, to improve conversions you need to go one step further: compare your best-performing platforms with those that aren’t performing so well, and close the gaps to unlock large jumps in revenue.

Much of improving revenue comes down to understanding your customer, and that ties in well with the next recommendation.
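The “close the gap” calculation can be sketched in a few lines of Python. It assumes the underperforming platform keeps the same traffic and average order value, so the uplift is sessions × (target rate − current rate) × average order value. The eBook does not give the session counts or order values behind the £2.7m figure, so this sketch does not try to reproduce it; only the two conversion rates come from the example above.

```python
def uplift_from_matching(
    sessions: int,
    current_conv: float,
    target_conv: float,
    avg_order_value: float,
) -> float:
    """Extra revenue if a platform converted at a peer platform's rate.

    Assumes the platform's sessions and average order value stay unchanged,
    so uplift = sessions * (target - current) * AOV. Returns 0 if the target
    is not higher than the current rate.
    """
    if target_conv <= current_conv:
        return 0.0
    return sessions * (target_conv - current_conv) * avg_order_value


# Conversion rates from the example above (0.74% Safari, 1.03% Chrome);
# the session count and order value are hypothetical placeholders.
extra = uplift_from_matching(sessions=200_000, current_conv=0.0074,
                             target_conv=0.0103, avg_order_value=500.0)
print(f"£{extra:,.0f}")  # £290,000
```

Running the same calculation for every pair of comparable platforms (Safari vs Chrome on Mac, for example) gives a rough, revenue-ranked list of where testing effort is likely to pay back most.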

Strategy 7: Conduct usability testing relating to your users

Perhaps the best example of this I have seen was from a prestige car retailer that, as a business, had grappled with its online presence, with a number of internal team members holding strong views on how the site should be.

The site was designed to show-case new and previously owned vehicles, typically with a price of £100,000 to £340,000.

However, the site had a range of conversion blockers and was generating little interest.

Digivante was engaged to support a turnaround of the site, understanding that there would be push-back on any changes we recommended. Our brief was to find these high-value individuals and understand their expectations and use of the website, so we went to our community to find the right fit.

Once collated, the results pointed strikingly in one direction. The site had a section for new models, then a smaller menu option for Used Vehicles, and it was this that was annoying users, for the following reasons:

- Most people using the website would likely be new to the brand, however they typically didn’t buy a new vehicle straight out. They were looking for a previously owned vehicle, yet this was difficult to find in the menu structure, and the filter process was poor once you got there.

- No one liked the label ‘Used Vehicles’, given that these people were buying vehicles often in the £100k+ price bracket.

- The view was that the ‘Used’ section just didn’t sell the prestige nature of the brand.

Armed with strong statistical feedback, the company decided to change how this section worked and how users navigated to it. They changed the wording to ‘Pre-owned’ and gave the section a bigger presence on the website. This made a big difference in meeting users’ expectations, and the company has iterated further on this strategy, introducing even more functionality in this area and seeing much better performance.

Usability can be as big as site design, or as small as categorisation. Another client, who sold hair products, had categorised one product as a hair product, but users weren’t looking for it there. A simple change of category increased sales by circa £500k a year.

So every release should have detailed user testing both pre- and post-launch. In fact, Digivante works with a number of organisations doing regular competitive analysis, in which blind testing is done on our client and their two competitors to see who has the edge in usability. This information is often vital in defining new functionality to keep up with, and then overtake, competitors.

Strategy 8: Don’t extend deadlines

Perhaps the easiest and hardest tip to follow from this list for any company is simply not to extend deadlines.

However, I hear you saying, “not so easy”.

But this, perhaps single-handedly, creates the most challenging situation for QA.

There are so many excuses:

- Designs were wrong

- Scoping wasn’t done correctly

- Developers off sick

- 3rd party integration challenges

- ‘Jim’ is on holiday

And many more. But frequently these delays don’t move the release date, or if they do, not to a sufficient degree.

This squeezes the testing window and puts intense pressure on QA to fix the ‘short-term’ release problem.

Time and time again, the QA team are put under pressure to ‘deliver’. This leads to:

- Short-cuts

- Reduced coverage

- Long hours, even weekend working

- Stressed staff

All of the above lead to one thing: less testing.

Even if the window isn’t compressed, things will pop up in the testing window leading to reduced time available.

In fact, it is paradoxical. Because the test team can’t complete everything, they will be concerned about what they might not have done, and typically, for a tester, this is done through increased documentation, in essence, documenting what hasn’t been done.

This sometimes leads to more time being spent on what hasn’t been done than on doing what needs to be done.

So, this strategy says that it is vitally important to protect the testing window that was scheduled. There will no doubt be delays of one form or another, but you really do want the coverage that QA proposed they could deliver.

Ultimately, with potential losses of thousands or even millions of pounds, it’s just too big a risk to skimp on testing.

Which leads on to our ninth strategy.

Strategy 9: Don’t accept risk

Testing is all about risk. If we weren’t worried about risk, we would simply push and pray on releases. So, if you are committed to quality and improving conversion rates, you have to manage the risks when you release.

What does that mean in practice?

It means relying on the due diligence that good QA brings, despite pressure from other areas.

Wrapping up, the key points from this guide include:

- Ensure that the regression pack is run to an acceptable level.

- Ensure that you have the correct test coverage run by the right people.

- Avoid the push and pray mentality.

However, it is more than that. It is also about avoiding the introduction of risk into the process at every stage. Give the BAs the time and specifications they need, hire the right developers and look after them, don’t let the business make rash late decisions, and steady the ship. This will percolate into quality, and quality will minimise risk. Minimised risk lets people do their jobs correctly, and that will naturally improve conversions, as customers enjoy using a product with fewer issues.

You may need to introduce a few practices such as user testing, but if the release is running at high risk, they will bring minimal benefit.

To conclude

Driving up ecommerce revenue can be challenging, but many ecommerce companies are leaving money on the table by simply not ensuring that the focus is on their users and the quality of their products.

Knowing your customer means understanding their device and understanding what they expect. If you match this with the right products, delivered on a platform that works well because of correct QA and strong development processes, then conversion rates will naturally improve. After all, there is nothing magic about what Amazon does.