Ecommerce no longer starts where brands expect it to

Online shopping rarely begins on a homepage anymore. For many users, the first interaction with a brand now happens inside an AI response, a short-form video, or a social feed. By the time someone reaches a website or app, they are often already mid-decision. They arrive looking for confirmation, not persuasion.

This shift does not apply equally to every category. In practice, AI-led discovery appears strongest when users are trying to solve a specific problem or make a functional choice. Products like food, supplements, tech, utilities, or household items lend themselves well to recommendation-led journeys. Browsing-led categories such as fashion or home décor still rely more heavily on visual inspiration and brand feel. That distinction matters, because it changes which journeys carry the most risk, and which pages users land on first.

In 2025, Digivante supported over a thousand test cycles across ecommerce, travel, automotive, beauty, and lifestyle brands. Our global tester community delivered more than 70,000 hours of real-world testing, uncovering hundreds of critical issues and thousands of quieter defects before they reached customers. What stood out was not an increase in obvious breakages. Sites rarely crashed. Apps generally loaded. The real risk appeared elsewhere, in moments of hesitation, uncertainty, and friction that stopped users from moving forward.

When product pages become the front door

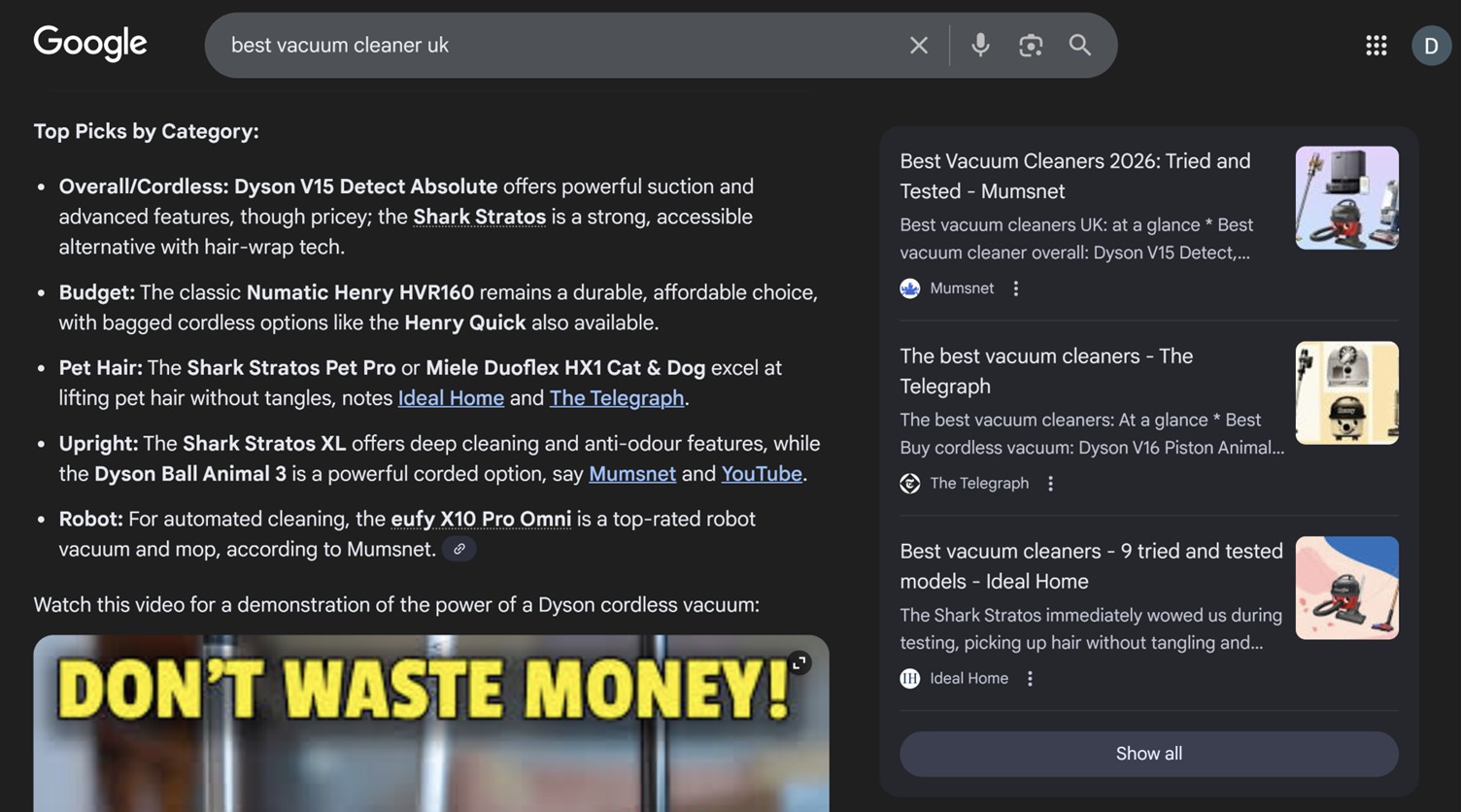

AI-led discovery tools increasingly summarise products, compare options, and answer buying questions before users ever see a brand site. This shifts the role of the website itself. Product pages replace homepages as the primary entry point.

In testing, we consistently see users land deep within journeys, with little patience for missing detail. If delivery costs are unclear, returns feel ambiguous, or sizing information takes effort to find, trust drops quickly. These journeys often look healthy in analytics. The failures become visible only when real people are observed using them.

This is where traditional success metrics start to mislead. Pages load. Buttons work. Conversion drops anyway.

Impulse buying leaves no space for friction

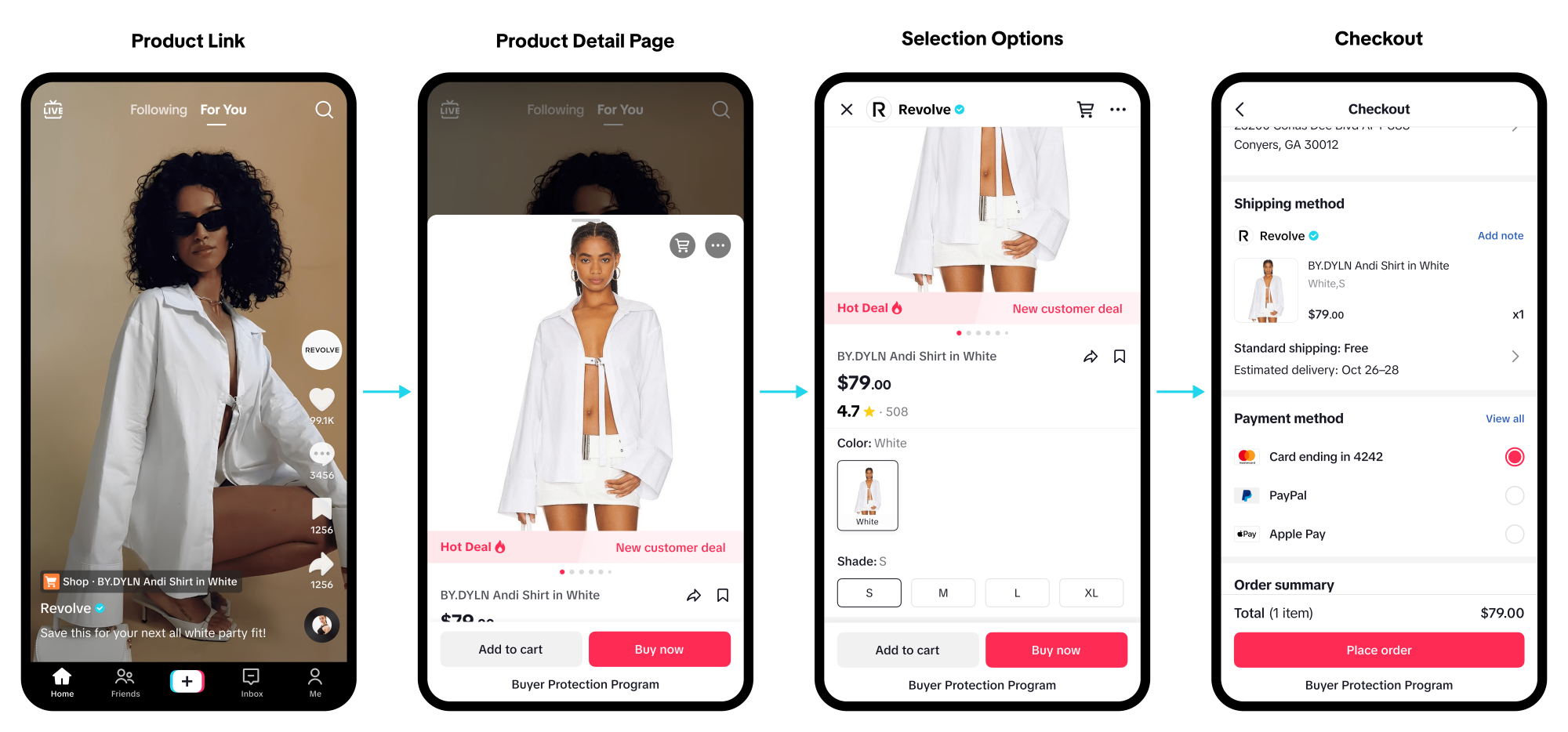

Social commerce has reshaped expectations. Platforms like TikTok have trained users to buy in-app, quickly, and often without planning. That behaviour does not stop at the platform boundary. It carries into websites and apps.

What’s changed is not just speed, but where confidence is formed. Influencer-led video recommendations increasingly act as the trust layer, particularly in categories like fashion and beauty where authenticity and peer validation matter. Recent research found that 27 percent of fashion and beauty shoppers have purchased luxury items directly through social platforms, signalling that confidence is often established before a user ever reaches a brand-owned product page. In these journeys, the role of the website or app is no longer to convince, but to avoid disrupting that confidence through friction, inconsistency, or failure at checkout.

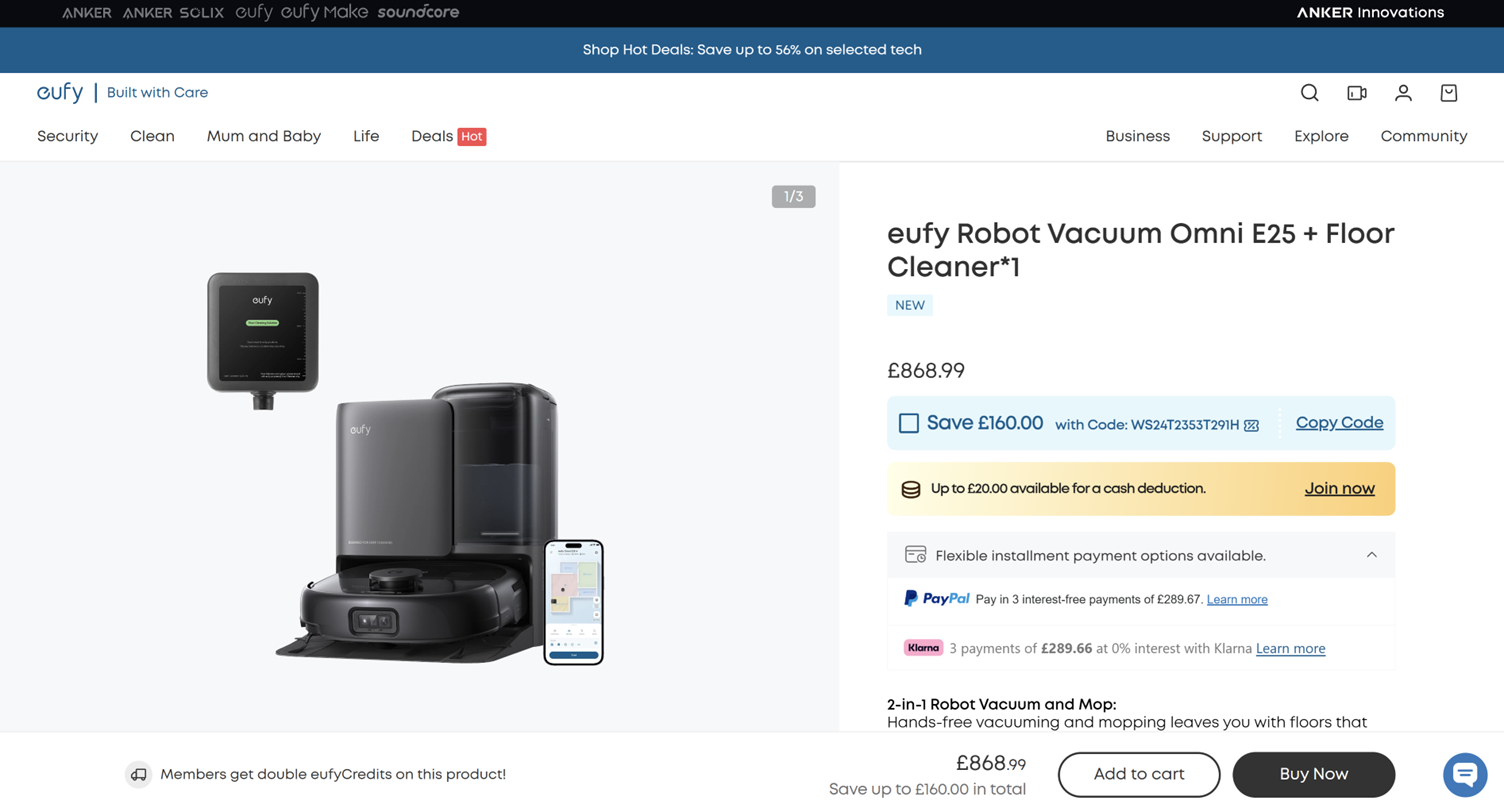

Tolerance for friction has dropped sharply. In-app browsers behave unpredictably. Interruptions are common. Mobile edge cases matter more than desktop perfection. These issues rarely appear in scripted tests. They surface when real users attempt real purchases, often with limited time and attention.

Social commerce also blurs traditional ownership boundaries. A user may discover, decide, and even initiate a purchase inside a platform, while fulfilment, payments, and support sit elsewhere. From the user’s perspective, this is a single journey. From a testing perspective, it expands where things can quietly fail, especially when teams optimise individual touchpoints rather than the experience end to end.

This can shift risk away from long journeys and toward short, fragile moments where failure is immediate.

AI introduces inconsistency in ecommerce, not clarity

AI now shapes recommendations, search results, summaries, and form completion. While this improves speed, it also introduces inconsistency. In testing, this often appears as conflicting information, missing context, or data that technically passes validation but feels wrong to the user.
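One narrow slice of this problem can be checked mechanically: whether the same product facts agree across the surfaces a user passes through. The sketch below is illustrative only. The source names (`ai_summary`, `product_page`, `checkout`), the field names, and the sample values are all hypothetical, and it assumes the facts have already been captured by hand or by some other tool.

```python
def find_conflicts(snapshots):
    """Return {field: {source: value}} for fields that differ or are missing across sources."""
    # Collect every field mentioned by any source.
    fields = set()
    for facts in snapshots.values():
        fields.update(facts)

    conflicts = {}
    for field in sorted(fields):
        # A source that never mentions the field is recorded as None.
        values = {source: facts.get(field) for source, facts in snapshots.items()}
        if len(set(values.values())) > 1:
            conflicts[field] = values
    return conflicts


# Hypothetical facts captured at three points in one journey.
snapshots = {
    "ai_summary": {"price": "29.99", "delivery": "free", "returns": "30 days"},
    "product_page": {"price": "29.99", "delivery": "3.95", "returns": "30 days"},
    "checkout": {"price": "29.99", "delivery": "3.95"},
}

conflicts = find_conflicts(snapshots)
for field, values in conflicts.items():
    print(field, values)
```

A check like this only flags literal disagreement or missing detail. It cannot tell whether information that matches everywhere still feels wrong to the person reading it, which is the part that needs human observation.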

One of the less visible impacts of AI-led discovery is the loss of a consistent starting point. Search results, AI summaries, and recommendations now vary based on user behaviour, past interactions, location, and prompt structure. Two users searching for the same product may be shown entirely different options, messaging, or routes into a site. From a testing perspective, this challenges the assumption that journeys are stable and repeatable.

We are already seeing this reflected in client briefs, with teams asking testers to begin journeys from natural search results rather than predefined URLs, acknowledging that discovery itself has become part of the risk surface.

Trust relies on consistency. In 2026, maintaining that consistency becomes harder, especially when AI-driven elements behave differently across users, devices, or sessions.

These are not defects in the traditional sense. They are behavioural mismatches: what users see is inconsistent, and the problem surfaces only when real users interact with the system and react to what they are shown.

Decisions happen faster or not at all

User behaviour continues to compress. People skim, decide, or leave. There are fewer chances to recover attention. Small UX flaws now carry disproportionate weight. Unclear language, weak error messages, or ambiguous next steps often trigger exit, even when nothing is technically broken.

These are not classic defects. They are confidence killers. They only emerge when users are observed, not when systems are checked.

Global journeys quietly fragment

Expansion continues, but control diminishes. One release creates hundreds of local experiences. Payment behaviour, address logic, content visibility, and legal expectations vary by region. Many of the most damaging issues we uncover only appear in live environments, with real users, real devices, and real payment methods.
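To illustrate how quickly one release multiplies into many local experiences, here is a minimal sketch that enumerates region, payment method, and device combinations. The regions, payment methods, and device labels are hypothetical placeholders, not a description of any real coverage plan.

```python
from itertools import product

# Hypothetical dimensions; real values would come from a team's own market data.
REGIONS = ["UK", "DE", "US"]
PAYMENT_METHODS = {
    "UK": ["card", "paypal"],
    "DE": ["card", "paypal", "sepa_debit"],
    "US": ["card", "paypal"],
}
DEVICES = ["ios_safari", "android_chrome", "desktop_chrome"]


def build_matrix(regions, payment_methods, devices):
    """Return every (region, payment, device) combination valid for that region."""
    matrix = []
    for region in regions:
        # Only pair a region with the payment methods offered there.
        for payment, device in product(payment_methods[region], devices):
            matrix.append((region, payment, device))
    return matrix


matrix = build_matrix(REGIONS, PAYMENT_METHODS, DEVICES)
print(len(matrix))  # 21 combinations from just three regions
```

Even this toy example yields 21 distinct journeys before languages, address formats, or legal content are considered, which is why a single test pass rarely covers what users in each market actually experience.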

This is where assumptions about “one fix” or “one test pass” start to break down.

Where digital risk now lives

In 2025 alone, Digivante delivered the equivalent of decades of in-house testing effort through real people and real journeys. What this volume highlights is that modern customer experience rarely fails loudly.

In 2026, CX fails subtly. Automated checks confirm that things exist and respond. Manual, real-user testing reveals how people actually experience them.

The teams that perform best will not test more for the sake of it. They will test more realistically, following modern shopping behaviour wherever it starts, and identifying where things break down.